“There’s a new kind of coding I call ‘vibe coding’, where you fully give in to the vibes, embrace exponentials, and forget that the code even exists.” – Andrej Karpathy

Something’s changing in the way we build software. It’s not just new tools or faster frameworks; it’s a shift in what can be achieved with the same resources. Teams are moving faster, thinking differently, and shipping at a pace that lets startups deliver MVP versions of their products to clients in weeks, or in some cases days. With all this speed comes more room for creativity, intuition, and even fun.

This change requires a new mindset where flow and speed matter as much as process, and where the best ideas often come from moments that don’t fit neatly into a sprint planning session. This isn’t just disruption by technology; it’s a deeper shake-up in how we write code and design software systems. It’s already happening, and we expect a wave of more personalized, niche solutions to reach the market soon.

Introduction

A lot has been said about the state of coding with AI and vibe coding. Even Y Combinator, the well-known American accelerator, warned in a recent podcast that software companies risk becoming obsolete if they don’t embrace AI-assisted development.

But what is it all about? And how does it hold up when we put it to the test and try to build an actual application with it? Is it just hype, or are the criticisms simply a skill issue? Let’s dive in and share how things went for us.

Our Experimentation

Motivation

Our customers often face a challenging choice: should they invest in a custom-built solution tailored to their exact needs, or go with an off-the-shelf product that covers most of the bases but might not solve their problem perfectly? It’s not just a technical decision; it involves budget, timing, and commercial strategy. At the end of the day, cash is the lifeblood of a company, and every company must keep a close eye on both costs and revenues. Making the right choice means balancing long-term value with short-term constraints. And that’s where we help our clients.

In a world of limited resources, tight timelines, and relentless competition, speed is everything.

A faster, more reliable way to build software can be a game-changer.

That’s why we’re embracing AI-driven development to accelerate prototyping, boost flexibility, and cut the usual overhead.

With AI, companies can:

- Move faster
- Test ideas more efficiently
- Deliver value sooner

It’s not just about changing how we code. It’s about transforming how companies interact with customers, adapt to feedback, and evolve their products at speed.

So, we decided to put the hype to the test. We picked a pilot project and committed to building it entirely by riding “the vibe”, fully powered by AI, creativity, and lean execution.

Let’s see where it took us.

Goal of the project

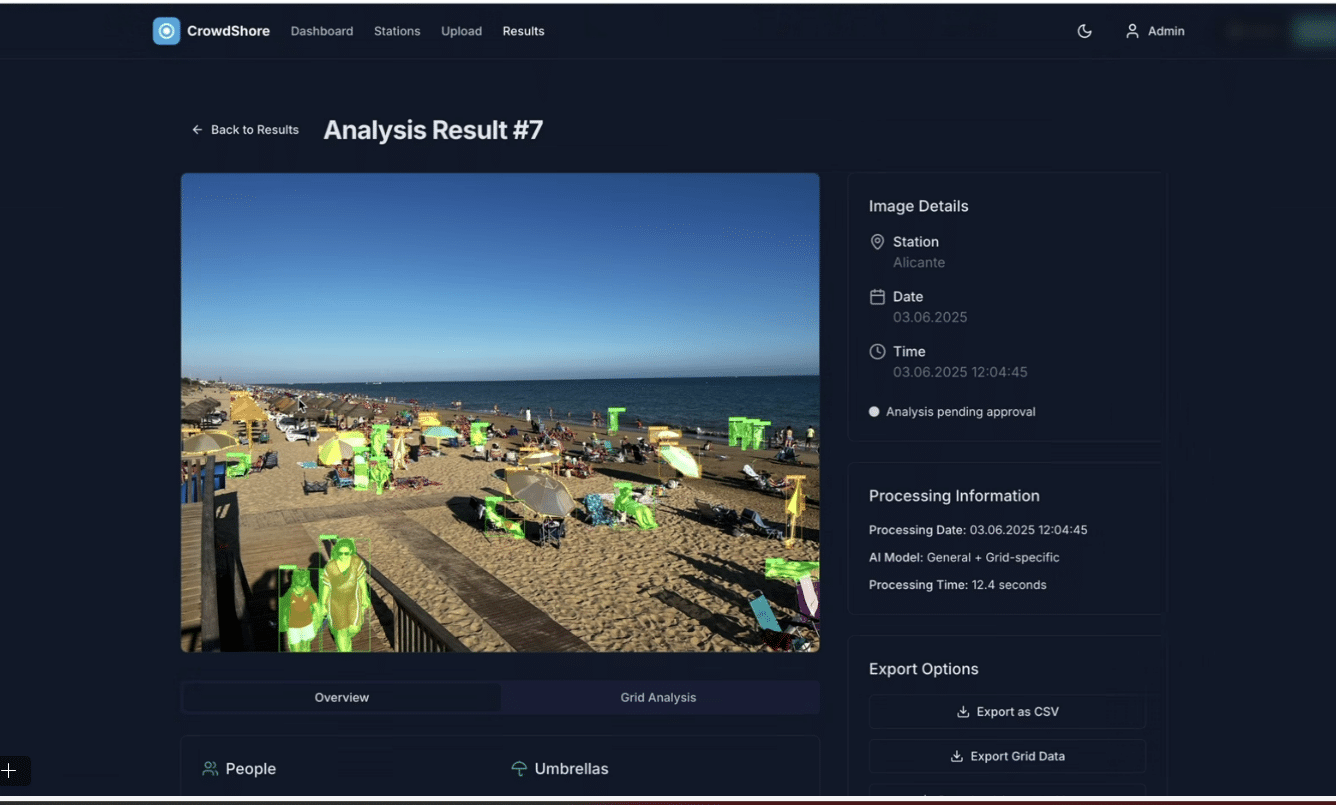

The goal of our project was to create a platform that allows users to upload images taken from specific locations on selected beaches. Using computer vision, the platform analyzes these images to provide valuable insights into human presence and the distribution of objects in the area.

To achieve this, we built the application with a modern tech stack: React with TypeScript for the frontend, FastAPI (Python) for the backend, and SQLite3 as the database. The entire system is deployed within a Docker container to ensure portability and ease of deployment.
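To make the data side of this stack more concrete, here is a minimal sketch of how uploads and detection results might be stored in SQLite and summarized per beach. It uses only Python's standard `sqlite3` module. The table names, column names, and helper functions (`init_db`, `record_upload`, `record_detection`, `people_per_beach`) are hypothetical and chosen for illustration; they are not taken from the actual project.

```python
import sqlite3

# Hypothetical schema: one row per uploaded image, one row per detected object.
SCHEMA = """
CREATE TABLE IF NOT EXISTS uploads (
    id INTEGER PRIMARY KEY,
    beach TEXT NOT NULL,
    location TEXT NOT NULL,
    file_path TEXT NOT NULL,
    uploaded_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE IF NOT EXISTS detections (
    id INTEGER PRIMARY KEY,
    upload_id INTEGER NOT NULL REFERENCES uploads(id),
    label TEXT NOT NULL,
    confidence REAL NOT NULL
);
"""


def init_db(conn: sqlite3.Connection) -> None:
    """Create the illustrative tables if they do not exist yet."""
    conn.executescript(SCHEMA)


def record_upload(conn: sqlite3.Connection, beach: str, location: str, file_path: str) -> int:
    """Store metadata for one uploaded image and return its row id."""
    cur = conn.execute(
        "INSERT INTO uploads (beach, location, file_path) VALUES (?, ?, ?)",
        (beach, location, file_path),
    )
    conn.commit()
    return cur.lastrowid


def record_detection(conn: sqlite3.Connection, upload_id: int, label: str, confidence: float) -> None:
    """Store one object reported by the vision model for a given upload."""
    conn.execute(
        "INSERT INTO detections (upload_id, label, confidence) VALUES (?, ?, ?)",
        (upload_id, label, confidence),
    )
    conn.commit()


def people_per_beach(conn: sqlite3.Connection) -> dict[str, int]:
    """Count detections labeled 'person', grouped by beach."""
    rows = conn.execute(
        """
        SELECT u.beach, COUNT(d.id)
        FROM uploads AS u
        JOIN detections AS d ON d.upload_id = u.id
        WHERE d.label = 'person'
        GROUP BY u.beach
        ORDER BY u.beach
        """
    ).fetchall()
    return dict(rows)
```

In a setup like this, a backend endpoint would call `record_upload` when an image arrives and `record_detection` for each object the vision model reports, while an admin dashboard would read from a query such as `people_per_beach`.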

Setup

During development we explored several tools, including Windsurf, Cursor, Lovable, and Anthropic’s Claude. In this article, however, we focus specifically on our experience with Cursor and Claude 3.7.

We used the Pro version of Cursor, which provided us with sufficient Agent actions to carry out the tasks required for the project.

In our experience, the frontend code generated in Cursor didn’t meet our quality expectations. To address this, we turned to v0 by Vercel, a tool designed specifically for React and TypeScript. v0 impressed us with its ability to generate dynamic and well-structured designs right from the start.

Another highlight of our frontend development was the automatic inclusion of a dark mode option—a feature often considered a “nice-to-have” but typically left for the later stages of a project. In this case, it was integrated from the beginning, saving us time and enhancing the user experience.

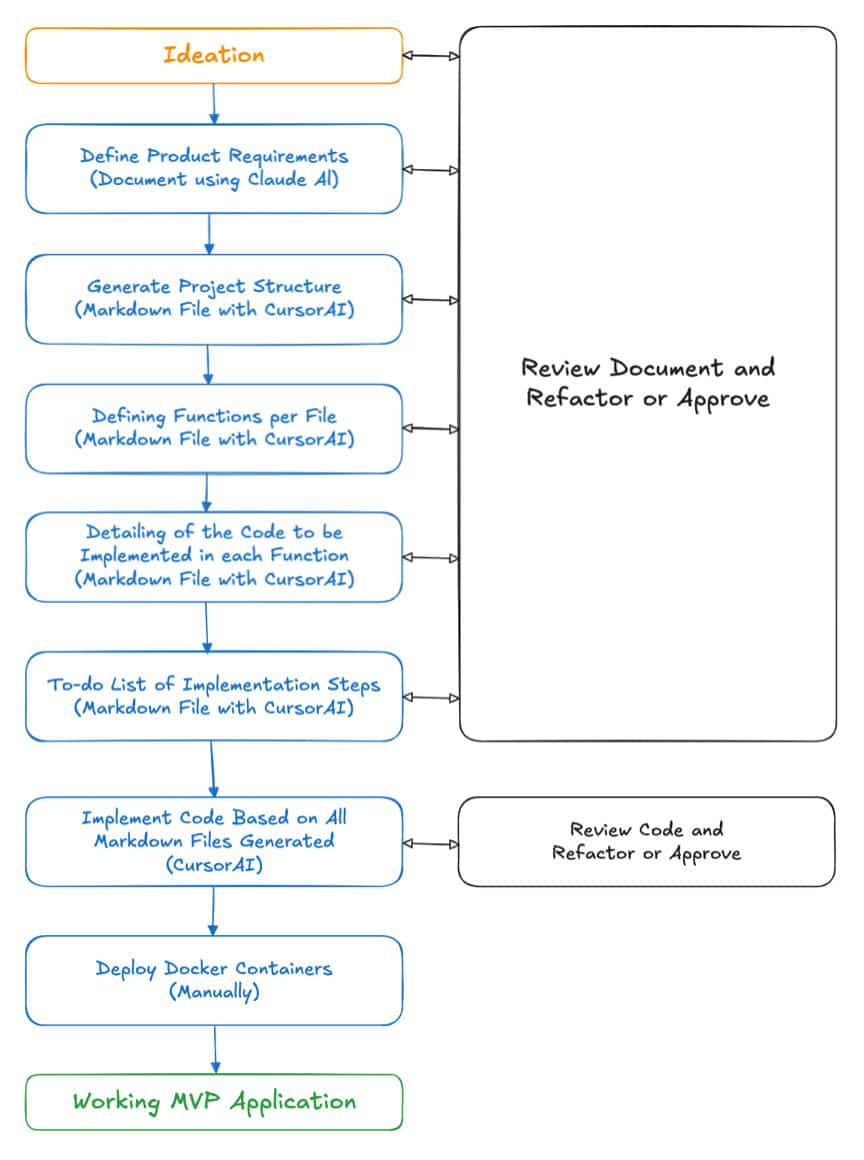

Process followed

To develop a comprehensive Product Requirements Document (PRD), we used Claude Chat to generate a detailed specification based on our initial functional goals. The application was expected to include user authentication, image upload capabilities, people detection via image analysis, and an admin dashboard to display the results.

Once the PRD was finalized and reviewed, we used it as the foundation for development and imported it into Cursor to guide the implementation.

During our initial iterations with Cursor, we made a common mistake: we submitted a complete prompt and expected the large language model (LLM) to handle the entire codebase accurately. While the LLM quickly produced a functional first version of the application, issues emerged when we attempted to scale the project—adding new features or fixing those that didn’t work as expected proved challenging. This highlighted the limitations of relying solely on the LLM for complex or evolving codebases.

The Challenges

This is where the real challenges began to surface. When we asked the tool to fine-tune or extend features, the AI often revisited and altered parts of the code that had already been resolved—sometimes reintroducing bugs, duplicating functions, or creating redundant and conflicting logic. The result was a progressively chaotic and inconsistent codebase.
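One symptom described above, duplicated functions, is easy to check for mechanically. The following is a minimal sketch, assuming a Python backend, that uses the standard `ast` module to flag module-level function names defined in more than one file. The helper name `find_duplicate_functions` and the sample file names are hypothetical. It is a review aid, not a substitute for reading the diff.

```python
import ast
from collections import defaultdict


def find_duplicate_functions(sources: dict[str, str]) -> dict[str, list[str]]:
    """Map each module-level function name defined more than once to its files.

    `sources` maps a file name to that file's source code.
    """
    seen: dict[str, list[str]] = defaultdict(list)
    for filename, code in sources.items():
        tree = ast.parse(code, filename=filename)
        # Only look at top-level definitions; methods such as __init__
        # legitimately repeat across classes and would be noise here.
        for node in tree.body:
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
                seen[node.name].append(filename)
    return {name: files for name, files in seen.items() if len(files) > 1}
```

Run after each AI-generated change, a check like this surfaces a helper that has quietly been re-implemented in a second module before the two copies start to diverge.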

But why does this happen? And is there a way to prevent it?

The root of the issue lies in the context window of modern large language models (LLMs), which is typically on the order of a few hundred thousand tokens (200,000 for Claude 3.7 Sonnet). While that may sound sufficient, it quickly becomes limiting once the AI has to juggle a frontend, a backend, and a growing chat history. Once the model runs out of context, it simply “forgets” parts of the codebase. As the saying goes, “out of sight, out of mind”: for the AI, anything outside its current context might as well not exist at all.
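A rough back-of-the-envelope calculation shows how quickly that budget fills up. The sketch below assumes the common heuristic of about four characters per token, which is only an approximation; real tokenizers vary by model and content. The function names and the `window_tokens` parameter are hypothetical, and the window size should be taken from your model's documentation.

```python
CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Very rough token estimate: about one token per four characters."""
    return len(text) // CHARS_PER_TOKEN


def context_usage(file_texts: dict[str, str], chat_history: str, window_tokens: int) -> float:
    """Return the estimated fraction of the context window that is consumed.

    `file_texts` maps file names to file contents; a result above 1.0 means
    the material cannot all fit and something will be dropped.
    """
    total = sum(estimate_tokens(text) for text in file_texts.values())
    total += estimate_tokens(chat_history)
    return total / window_tokens
```

For example, a frontend and a backend of roughly 400,000 characters each, plus 80,000 characters of chat history, come to about 220,000 estimated tokens. Against a 200,000-token window that is a usage of about 1.1, so part of the project is already out of the model's sight.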

The Solution

Vibe coding and AI-assisted IDEs can significantly accelerate development, especially when building prototypes and MVPs.

However, AI-assisted IDEs do not yet solve complex software architecture challenges. Human expert insight is still essential: a seasoned software developer must ensure that any solution is scalable, secure, performant, and built following best practices. The landscape is evolving rapidly, and at Cactus we are keeping a very close eye on these trends.

We like to think of our AI-assisted coder as a fearless junior developer—it boosts speed, scales our efforts, and helps us deliver faster. And yes, we have definitely decided to ride this wave!

Unresolved Drawbacks

One of the major challenges we faced involved working with specific APIs or tools for which the LLM had outdated training data—Docker being a prime example. The model frequently generated incorrect or deprecated configurations, and after multiple failed attempts to get something functional, we ultimately had to rewrite the entire Docker implementation manually.

This experience highlights an important reality about the current state of “vibe coding” with AI. Working with an LLM often feels like collaborating with an enthusiastic and fearless junior developer: eager to help, but lacking the discipline and caution of a seasoned engineer. If left unchecked, it will push untested changes to production, break the entire codebase, and force-push without hesitation or remorse.

This makes the role of a senior developer more critical than ever. While AI can accelerate development, it also introduces a new kind of risk: the ease of deploying seemingly functional code that lacks proper validation, security, or adherence to best practices. What used to be difficult due to a lack of technical know-how is now deceptively easy to build—and potentially dangerous if not carefully reviewed.

Conclusion

Our application was successfully deployed thanks to the oversight of our senior development team, who thoroughly reviewed the codebase and requested corrections where needed—even in areas where we encountered difficulties, such as with Docker. Ultimately, this project became a valuable learning experience, teaching us how to effectively guide AI using the tools available today. The key takeaway? It’s absolutely possible to build solid applications with AI—as long as knowledgeable humans are involved in the process.

Much has been said about AI not yet being ready to code independently, and that’s still true. However, with the right approach and thoughtful engineering, AI can dramatically enhance your productivity and output quality. By following a disciplined, step-by-step method—yes, even if it’s a bit repetitive—you can get far better results than those who rely on ad hoc prompting and hope for the best.

If you’re curious about how vibe coding can help accelerate your prototype, validate your use case, or assess market potential, we’d love to hear from you. Reach out through our channels, and let’s explore how AI-assisted development can work for your team.