Join Torsten Reidt, AI Engineer of The Cactai Team, for a tour through the world of artificial intelligence. Discover the pivotal moments that have shaped the field of AI and the role Generative AI plays in today’s technological landscape. Whether you’re an AI enthusiast or simply curious about the evolution of this technology, this overview offers insights into the past, present, and future of AI.

In today’s digital age, it’s almost impossible to escape the buzz surrounding Artificial Intelligence (AI). A quick internet search turns up a flood of new entries every day, and the technology is developing at a breathtaking pace. Before diving into the current state of the art, I would like to take a step back and start with a look at the history of AI.

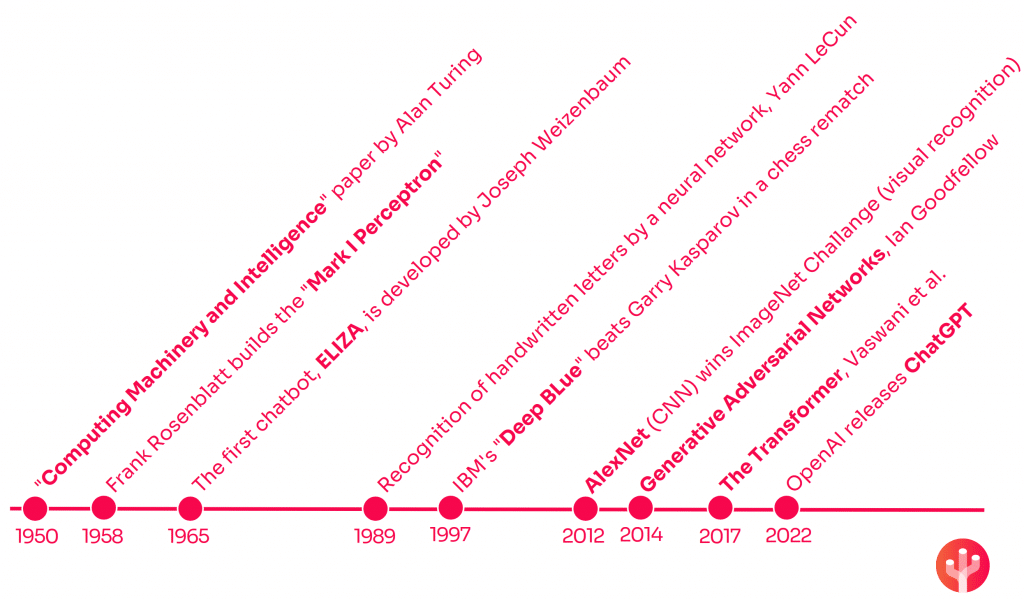

More than 70 years ago, in 1950 to be exact, Alan Turing’s paper “Computing Machinery and Intelligence” was published. Even though the term “Artificial Intelligence” was not mentioned explicitly, Turing raised interesting questions and thoughts. The term “Artificial Intelligence” itself was coined a few years later for the 1956 Dartmouth Summer Research Project.

Ever since then, there has been active research in the academic field of Artificial Intelligence. The trajectory has not been linear, however. Waves of excitement (increased research funds) were followed by so-called “AI Winters” (decreased research funds). The list of achievements along the way is quite long, so I’ll highlight only some of the milestones, without diminishing the importance of those not mentioned.

Now that we have seen that the development had its ups and downs over the last 70 years, what led to the current AI boom? Why is everyone talking about this technology nowadays? I would argue that breakthrough developments in a single sub-domain of AI are the reason: Generative AI.

Generative AI refers to a type of artificial intelligence that uses machine learning algorithms to generate new content such as images or text. During the training phase, these algorithms analyze massive datasets, identifying patterns, structures, and features within the data. The learned structures are then used to create new content.

While the possibility of generating images with Generative Adversarial Networks may have gone under the radar of the broad public, the release of ChatGPT by OpenAI was quite disruptive. Maybe the ability to understand and respond to natural language humanized AI in a certain way, or maybe it was simply the first time the broad public had access to an AI system via an easy-to-use interface. Whatever the reason, ChatGPT surely changed the public perception of AI.

When AI is mentioned nowadays, people often think mainly of systems that take text as input (a question) and return text as output (the answer). The models that power this kind of application are called “Large Language Models” (LLMs), and the application just described is known as a “chatbot”. Other tasks where LLMs shine are text summarization, text classification and sentiment analysis.

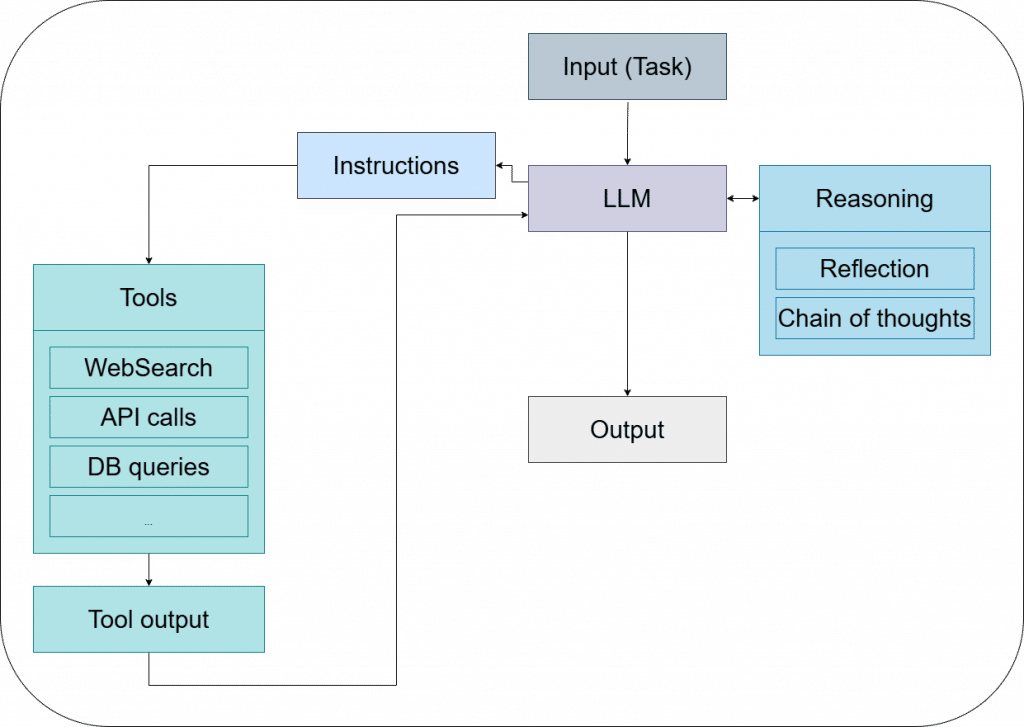

The most interesting recent application of LLMs is, in my opinion, Agentic Systems. An Agentic System consists of one or more agents. Each agent is powered by an LLM, which enables the system to be goal-oriented, to have a certain level of autonomy, and to be capable of reasoning and decision-making. While LLMs are bound to text input/output, an agent often integrates with external tools, databases or APIs to extend its capabilities beyond text generation. A scheme of an Agentic System (single agent) is shown in the following figure.

You can think of the LLM in this context as the agent’s brain. The right-hand side of the figure describes how the LLM should act. If the Agent were a human, you would describe this roughly as a “way of thinking”. The left-hand side of the figure shows some examples of Tools the Agent can use. At this point, it is important to remember that LLMs can only take text as input and create text as output. Consequently, the LLM does not call a Tool itself; it creates instructions stating which Tool to use with which function arguments. The function call itself is done programmatically with a third-party module. The results of the function call (if any) are then returned to the LLM and processed further. As for the Tools themselves, imagination sets the limits: you can implement any functionality you need. This may be an API call, a database query or even an interaction with your Operating System.
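To make the tool-calling step more tangible, here is a minimal sketch in Python. It is not how any particular agent framework does it: the tool names, the JSON format of the request, and the `fake_llm` stand-in (which replaces a real LLM call) are all assumptions made for illustration. The point is only that the LLM emits text describing a call, and ordinary code executes it.

```python
import json

# Hypothetical tools; any Python callable could be registered here.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add(a: float, b: float) -> float:
    return a + b

# Registry mapping tool names (as the LLM would write them) to functions.
TOOLS = {"get_weather": get_weather, "add": add}

def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call: returns a tool request as JSON text."""
    return json.dumps({"tool": "add", "arguments": {"a": 2, "b": 3}})

def run_tool_call(llm_output: str):
    """Parse the LLM's text output and execute the requested tool."""
    request = json.loads(llm_output)
    tool = TOOLS.get(request["tool"])
    if tool is None:
        raise ValueError(f"Unknown tool: {request['tool']}")
    return tool(**request["arguments"])

# The result would normally be sent back to the LLM as text.
result = run_tool_call(fake_llm("What is 2 + 3?"))
print(result)
```

In a real system, the result would be appended to the conversation so the LLM can use it to formulate its final answer or request another tool.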

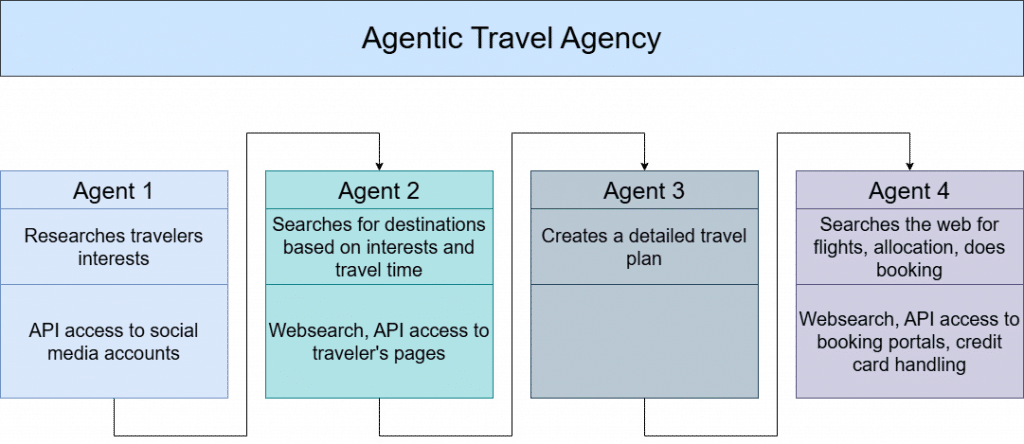

To give an example of where an Agentic System could be used to automate processes, I imagined an Agentic Travel Agency. It uses a system of several agents which process the task of planning and booking a holiday trip sequentially. The following figure shows what this system might look like.

While the above example could suggest that setting up an Agentic System is an easy task, I want to highlight that this is not necessarily the case. For a reliable Agentic System in a production environment, one has to deal with the non-deterministic nature of LLMs, changing input prompts or even changes in LLM versions, to name a few challenges.
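The sequential hand-off in the travel agency example can be sketched in a few lines of Python. This is a deliberately simplified illustration: the agent names (`planner`, `flight_booker`, `hotel_booker`) and the shared `state` dictionary are assumptions of mine, and each agent is a plain function where a real system would wrap an LLM call and deal with the challenges named above.

```python
from typing import Callable

# Each "agent" is a plain function here; in a real system each would wrap an LLM.
Agent = Callable[[dict], dict]

def planner(state: dict) -> dict:
    # Hypothetical planning agent: adds an itinerary to the shared state.
    return {**state, "plan": f"3 days in {state['destination']}"}

def flight_booker(state: dict) -> dict:
    # Hypothetical booking agent: records a flight booking.
    return {**state, "flight": f"flight to {state['destination']} booked"}

def hotel_booker(state: dict) -> dict:
    # Hypothetical booking agent: records a hotel booking.
    return {**state, "hotel": f"hotel in {state['destination']} booked"}

def run_pipeline(agents: list[Agent], state: dict) -> dict:
    """Run the agents one after another, passing the state along."""
    for agent in agents:
        state = agent(state)
    return state

trip = run_pipeline([planner, flight_booker, hotel_booker], {"destination": "Lisbon"})
print(trip)
```

Passing a single state object from agent to agent keeps each step easy to test in isolation, which helps when individual agents behave non-deterministically.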

As I mentioned earlier, natural language-based systems usually come to mind when discussing AI. However, there are many other interesting areas within Artificial Intelligence such as Speech Recognition, Music Generation or Computer Vision. Within the latter, I would like to highlight the subdomain Object Detection.

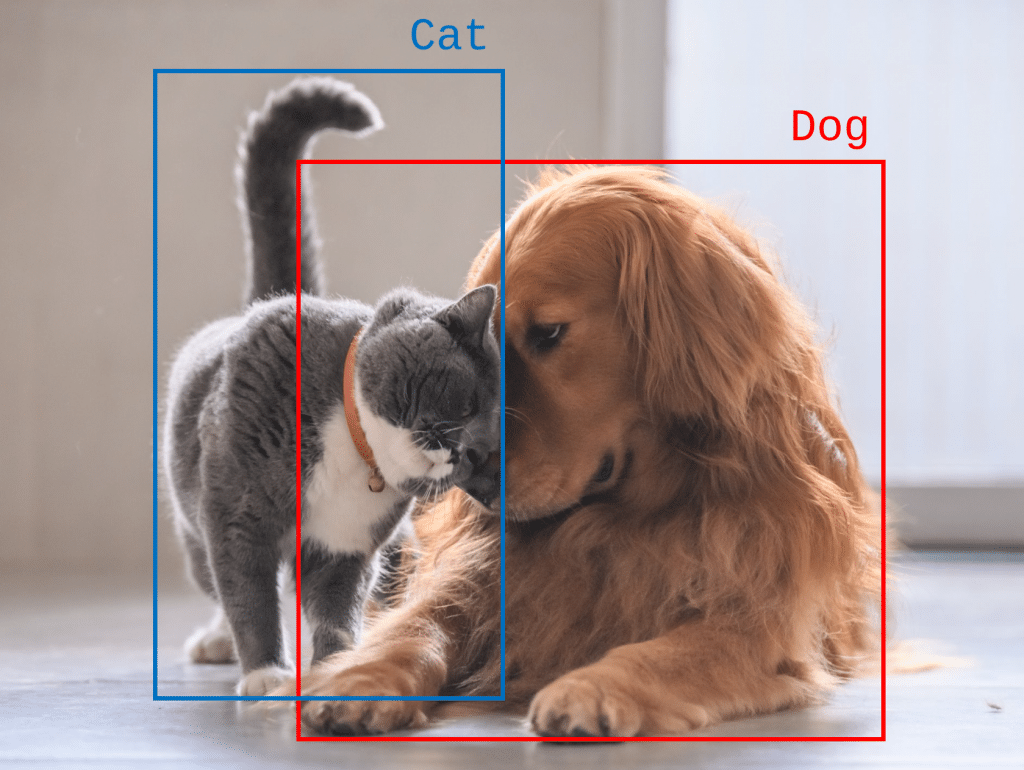

While it has been around for some time, Object Detection has gained importance due to its application in fields such as medical imaging, surveillance systems and self-driving cars. The primary tasks involved in Object Detection are localizing and classifying objects in images or video. The following images illustrate typical results of Object Detection, where two objects have been localized (marked with a bounding box) and classified (labelled with text) in a single image. When applied to videos, Object Detection enables the tracking of objects from one frame to the next.
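As a rough illustration of how detections can be linked from one frame to the next, the sketch below matches bounding boxes by their overlap (Intersection over Union, IoU). This is only one simple approach and an assumption on my part, not a description of any specific tracker; the `Detection` class, the greedy matching and the threshold of 0.3 are chosen purely for the example.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str                               # class assigned by the detector
    box: tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max)

def iou(a, b) -> float:
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def match(prev, curr, threshold=0.3):
    """Greedily link each previous detection to the best-overlapping
    unmatched current detection with the same label."""
    matches, used = {}, set()
    for i, p in enumerate(prev):
        best_j, best_score = None, threshold
        for j, c in enumerate(curr):
            if j in used or c.label != p.label:
                continue
            score = iou(p.box, c.box)
            if score >= best_score:
                best_j, best_score = j, score
        if best_j is not None:
            matches[i] = best_j
            used.add(best_j)
    return matches

frame1 = [Detection("dog", (10, 10, 50, 50)), Detection("cat", (100, 100, 140, 140))]
frame2 = [Detection("cat", (105, 100, 145, 140)), Detection("dog", (12, 10, 52, 50))]
print(match(frame1, frame2))  # maps indices in frame1 to indices in frame2
```

Production trackers usually add motion models and handle objects that appear or disappear, but the core idea of associating boxes across frames is the same.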

If you want to apply Object Detection, you usually don’t need to start from scratch, as pre-trained open-source models are available, such as YOLO (You Only Look Once), Faster R-CNN or RetinaNet. Of these, YOLO is arguably the most popular.

The two systems highlighted here, Agentic Systems using Large Language Models and Object Detection using YOLO, are just introductory examples of AI applications. Many more AI systems already influence our everyday lives. Just think of Netflix recommending your next movie, the spam filter in your email account or Google tailoring advertisements to your preferences. These impressive, though specialized, capabilities lead us back to the initial question of this blog post:

Are the machines rising?

I would argue that they are not, at least not yet. Current AI systems are designed and trained to perform well on very specific tasks such as text generation or Object Detection in images. This specialization limits their versatility in other domains. To overcome this limitation, significant effort is going into combining individual capabilities into Multimodal Artificial Intelligence models. This new class of models can process different types of input such as images, video or text. Some examples are OpenAI’s GPT-4o (accepts any combination of text, images or speech as input), Kyutai’s Moshi (combines text and speech input), and LLaVA (combines image and text input).

The development of Multimodal Artificial Intelligence is seen by many as a crucial step towards achieving Artificial General Intelligence (AGI), which aims to match or even surpass human capabilities across a wide range of cognitive tasks.

Despite these advancements, all currently existing models, including the Multimodal models, still fall short in several key areas, including:

- Human-like reasoning and problem-solving

- Generalization across domains

- Capability to adapt to new situations

- Real-time learning and understanding

- Context understanding

- Consciousness and self-awareness

- Creativity and innovation

Only by addressing these challenges might we witness an artificial system which comes close to human intelligence. Researchers and big tech companies like Microsoft, Google, Meta, OpenAI or Amazon are likely to pursue this path. Even if they do not succeed, there will be many new milestones to highlight in future blog posts.

What’s next?

While the machines are not rising yet, AI development is progressing at a fast pace. Agentic Systems in combination with Multimodal models are creating opportunities for streamlining processes, automating tasks, and generating new business opportunities. At Cactus, we are exploring all these possibilities while keeping in mind critical issues such as data privacy, ethics and potential biases. As we move forward, we will continue to monitor these developments and share new milestones in future blog posts.